3.3 Commodity Clusters

The third major paradigm in parallel architecture emerged from the convergence of economic and software trends. While established vendors focused on highly specialized, proprietary supercomputers, a movement in the academic and research communities demonstrated that high-performance computing (HPC) could be achieved by aggregating commercial off-the-shelf (COTS) components. This approach, known as commodity clustering, put supercomputing-class performance within reach of far more institutions.

Foundational Trends in the Early 1990s

Two key developments enabled the rise of commodity clusters:

- Commodity Microprocessor Performance: The competitive personal computer market drove rapid performance growth in commodity microprocessors. Tracking the transistor-density gains described by Moore’s Law, the performance of processors from companies like Intel roughly doubled every 18 to 24 months. By the early 1990s, these inexpensive processors were approaching the performance of processors used in high-end workstations.[1]

- Growth of Open-Source Software: Open-source software, particularly the Linux operating system, provided a free and customizable alternative to the proprietary operating systems of traditional supercomputer vendors.[2] Linux gave system builders significant control over the software stack, allowing them to optimize it for parallel execution.[3]
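To make the doubling claim concrete, the compounding can be worked out directly. The following is a minimal sketch, not a measurement: the function name `growth_factor` and the six-year window are illustrative assumptions, and real processor performance did not follow a perfectly smooth curve.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles
    every `doubling_months` months (idealized exponential model)."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    # Compare the two ends of the 18 to 24 month doubling range.
    for months in (18, 24):
        factor = growth_factor(6, months)
        print(f"doubling every {months} months: x{factor:.0f} after 6 years")
```

Under this idealized model, six years of growth yields a 16-fold gain at an 18-month doubling period and an 8-fold gain at 24 months, which shows how quickly a commodity part could close the gap with a workstation-class processor.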

Case Study: The Beowulf Project (1994)

These trends were demonstrated in 1994 at NASA’s Goddard Space Flight Center by the Beowulf project, led by Thomas Sterling and Donald Becker.

Thomas Sterling, co-inventor of the Beowulf cluster, with the Naegling cluster at Caltech (1997). Naegling was the first cluster to achieve 10 gigaflops of sustained performance. Credit: NASA.

Project Goal: To build a machine capable of one gigaflops (10^9 floating-point operations per second) for less than $50,000, an order of magnitude below the cost of a comparable commercial system at the time.[2]

The project’s innovation was not in creating new hardware, but in systems integration. The Beowulf design involved three key elements:[2]

- Commodity Hardware: 16 standard PCs, each with an Intel 486DX4 processor.

- Commodity Network: A standard 10 megabit-per-second Ethernet network to connect the nodes.

- Commodity Software: The Linux operating system, along with open-source message-passing libraries like Parallel Virtual Machine (PVM) and later MPI, to enable the individual nodes to function as a single, cohesive parallel machine.[4]

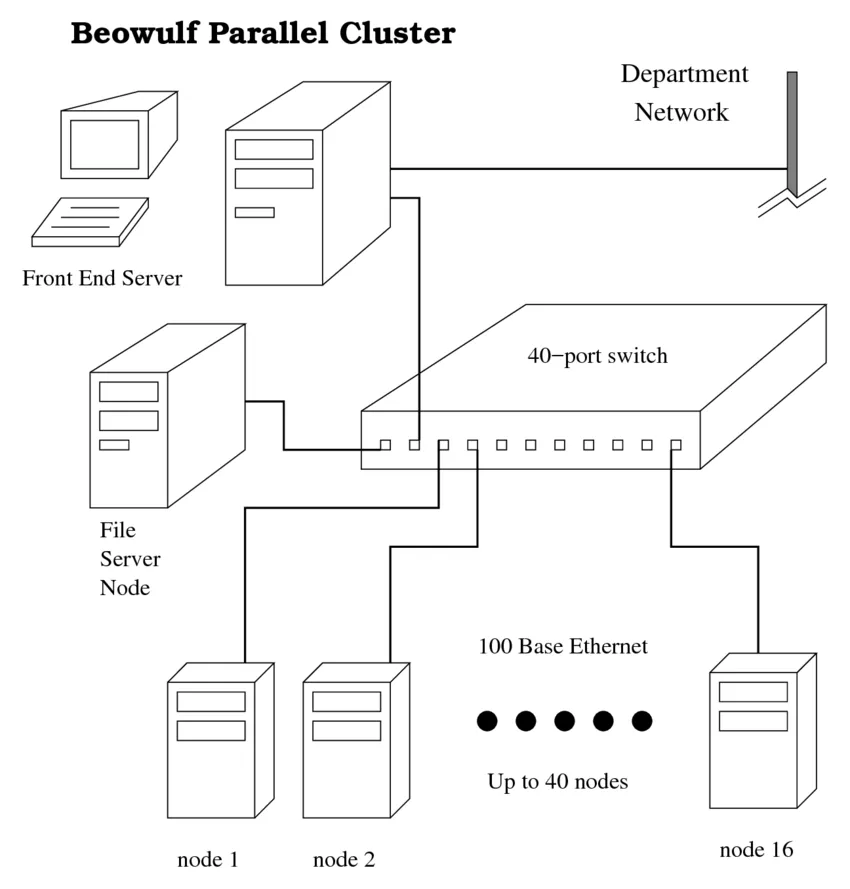

A typical Beowulf cluster architecture, featuring a front-end server, a file server, and multiple compute nodes connected via a commodity Ethernet switch. Credit: Yeo-Yie Charng.

The Beowulf project demonstrated that a collection of inexpensive components could deliver high performance at a lower cost than traditional supercomputers.

Comparative Analysis: Beowulf vs. Proprietary MPP

The Beowulf model’s distinct approach is evident when it is compared with a contemporary Massively Parallel Processing (MPP) system such as the Cray T3D.

| Feature | Beowulf Cluster (1994) | Cray T3D (1993) |
|---|---|---|
| Processors | 16 × commodity Intel 486DX4 (100 MHz) [2] | 32 to 2,048 × DEC Alpha 21064 (150 MHz) with custom Cray support logic |
| Peak Performance | ~1 GFLOPS (project target) [2] | Up to ~300 GFLOPS (highly configuration dependent) |
| Interconnect | Commodity 10 Mbit/s Ethernet [2] | Custom, high-bandwidth 3D torus network |
| System Software | Open-source (Linux, PVM/MPI) [4] | Proprietary (UNICOS MAX) |
| Approximate Cost | < $50,000 [2] | Millions of dollars |
| Development Model | Integration of COTS components | Custom design and manufacturing of nearly all components |

Table 1: A comparison of the Beowulf commodity cluster with a proprietary MPP system from the same era, highlighting the difference in cost and design philosophy.
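The headline figures in Table 1 can be sanity-checked with simple arithmetic: theoretical peak is the processor count times the clock rate times the floating-point results completed per cycle. The sketch below assumes one floating-point result per clock for the Alpha 21064, which is an assumption chosen because it is consistent with the table's upper figure; the function names are hypothetical. The same formula is not applied to the 486DX4, whose floating-point unit completes well under one result per cycle.

```python
def peak_gflops(processors: int, clock_mhz: float,
                flops_per_cycle: float = 1.0) -> float:
    """Theoretical peak in GFLOPS: processors x clock x results per cycle.
    flops_per_cycle=1.0 is an assumption, not a vendor specification."""
    return processors * clock_mhz * flops_per_cycle / 1000.0


def dollars_per_gflops(cost_usd: float, gflops: float) -> float:
    """Cost efficiency: dollars spent per GFLOPS delivered."""
    return cost_usd / gflops


if __name__ == "__main__":
    # Smallest and largest Cray T3D configurations listed in Table 1.
    for n in (32, 2048):
        print(f"T3D with {n} processors: {peak_gflops(n, 150):.1f} GFLOPS peak")
    # Beowulf design target: 1 GFLOPS for under $50,000.
    print(f"Beowulf target: under ${dollars_per_gflops(50_000, 1.0):,.0f} per GFLOPS")
```

With these assumptions the largest T3D configuration comes out at about 307 GFLOPS, matching the "up to 300 GFLOPS" entry, while the Beowulf target corresponds to a ceiling of $50,000 per GFLOPS.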

The Cluster Computing Model

The success of Beowulf established an influential model for building supercomputers, characterized by:

- Price-to-Performance: By leveraging the economies of scale of the PC industry, clusters offered a price-to-performance ratio that proprietary systems could not match.[5]

- Accessibility: University departments, research labs, and smaller companies could now afford to build and operate their own supercomputers, leading to broader access to HPC.[5]

- A Supporting Ecosystem: A community emerged, sharing expertise and contributing to the open-source software stack that powered these clusters.[6]

This model represented a shift in focus, from designing custom hardware to designing the system architecture and software needed to efficiently manage thousands of commodity processors.

Evolution of Cluster Technology

The commodity cluster was not a static concept; it evolved by continuously incorporating advances from the mainstream market.

- Processor Evolution: As seen in the LoBoS (Lots of Boxes on Shelves) cluster at the National Institutes of Health (NIH), clusters were regularly upgraded to the latest commodity processors, moving from Pentium Pro to Pentium II, and later to AMD Athlon and Opteron chips.[7]

- Interconnect Evolution: Early clusters were often limited by the high latency and low bandwidth of standard Ethernet. This led to the development of specialized, high-performance interconnects like Myrinet and, later, InfiniBand. As these technologies matured and their costs decreased, they were integrated into clusters, significantly improving performance for tightly coupled parallel applications and reducing the gap with proprietary interconnects.[7]
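Why latency and bandwidth both matter can be seen with a common first-order cost model, in which the time to deliver a message is a fixed startup latency plus the message size divided by the link bandwidth. The sketch below is illustrative only: the 10 Mbit/s figure comes from the original Beowulf network, but the latency values and the faster link's parameters are hypothetical placeholders, not measured figures for Ethernet, Myrinet, or InfiniBand.

```python
def transfer_time_s(message_bytes: int, bandwidth_mbit_s: float,
                    latency_us: float) -> float:
    """First-order message cost: startup latency + size / bandwidth."""
    startup = latency_us * 1e-6                      # microseconds -> seconds
    streaming = (message_bytes * 8) / (bandwidth_mbit_s * 1e6)
    return startup + streaming


if __name__ == "__main__":
    # (label, bandwidth in Mbit/s, latency in microseconds)
    # Latencies and the second link are hypothetical, for illustration.
    links = [
        ("10 Mbit/s Ethernet", 10.0, 100.0),
        ("hypothetical fast link", 1000.0, 10.0),
    ]
    for size in (64, 1_000_000):                     # small vs large message
        for label, bw, lat in links:
            t = transfer_time_s(size, bw, lat)
            print(f"{size:>9} bytes over {label}: {t * 1e3:.3f} ms")
```

In this model small messages are dominated by the latency term and large messages by the bandwidth term, which is why tightly coupled applications that exchange many small messages benefited most from lower-latency interconnects.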

Standardization: The Message Passing Interface (MPI)

A critical element in the dominance of the cluster model was the standardization of the programming model.

The Problem: In the early days of parallel computing, software was often tied to a specific vendor’s hardware, creating a portability problem. A program written for a Thinking Machines CM-5 would not run on a Cray T3D.

To solve this, a consortium of over 40 organizations from academia and industry collaborated to create a standard for message-passing programming.[8] The result was the Message Passing Interface (MPI), with version 1.0 released in 1994.[9]

MPI provides a portable, vendor-neutral Application Programming Interface (API) for parallel programming on distributed-memory systems. Its key contributions include:

- Portability: An MPI program could run, with little or no modification, on virtually any parallel machine, from a multi-million dollar MPP to a self-built Beowulf cluster.[10]

- Decoupling: It decoupled parallel software development from specific hardware platforms.

- Ecosystem Growth: It fostered a rich ecosystem of scientific applications, libraries, and tools that could be widely shared and utilized.

MPI became the de facto standard for HPC, providing the software framework that supported the wide adoption of cluster computing.[10]
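The message-passing style that MPI standardized can be illustrated without MPI itself. The sketch below is a stand-in, not the MPI API: it uses Python threads and `queue.Queue` mailboxes from the standard library to mimic ranks that share no data and communicate only by explicit sends and receives. The name `run_ranks` and the strided data split are illustrative assumptions; in a real MPI program the same pattern would typically be written with calls such as `MPI_Send` and `MPI_Recv`, or a single `MPI_Reduce`.

```python
import queue
import threading


def run_ranks(data, size):
    """Sum `data` with `size` workers ("ranks") that exchange results
    only through messages, mimicking a distributed-memory program."""
    inbox_rank0 = queue.Queue()      # mailbox owned by rank 0
    result = []

    def worker(rank):
        # Every rank runs the same code on its own slice of the data.
        partial = sum(data[rank::size])
        if rank != 0:
            inbox_rank0.put((rank, partial))     # "send" to rank 0
        else:
            total = partial
            for _ in range(size - 1):            # "receive" from each peer
                _sender, value = inbox_rank0.get()
                total += value
            result.append(total)

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result[0]


if __name__ == "__main__":
    print(run_ranks(list(range(1, 101)), size=4))
```

Because each worker touches only its own slice and the mailbox, the same logic would carry over to separate machines once the queue is replaced by a network transport, which is the portability property that a standard interface provides.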

References

Footnotes

1. Commodity computing - Wikipedia, accessed October 2, 2025, https://en.wikipedia.org/wiki/Commodity_computing

2. Computing with Beowulf Parallel computers built out of mass-market …, accessed October 2, 2025, https://ntrs.nasa.gov/api/citations/19990025448/downloads/19990025448.pdf

3. The Roots of Beowulf - NASA Technical Reports Server (NTRS), accessed October 2, 2025, https://ntrs.nasa.gov/api/citations/20150001285/downloads/20150001285.pdf

4. History of computer clusters - Wikipedia, accessed October 2, 2025, https://en.wikipedia.org/wiki/History_of_computer_clusters

5. The History of Cluster HPC » ADMIN Magazine, accessed October 2, 2025, https://www.admin-magazine.com/HPC/Articles/The-History-of-Cluster-HPC

6. What’s Next in High-Performance Computing? - Communications of the ACM, accessed October 2, 2025, https://cacm.acm.org/research/whats-next-in-high-performance-computing/

7. The LoBoS Cluster - The Laboratory of Computational Biology, accessed October 2, 2025, https://www.lobos.nih.gov/LoBoS-history.shtml

8. Message Passing Interface - Wikipedia, accessed October 2, 2025, https://en.wikipedia.org/wiki/Message_Passing_Interface#:~:text=The%20message%20passing%20interface%20effort,%2C%201992%20in%20Williamsburg%2C%20Virginia.

9. Message Passing Interface - Wikipedia, accessed October 2, 2025, https://en.wikipedia.org/wiki/Message_Passing_Interface

10. Message Passing Interface (MPI), accessed October 2, 2025, https://wstein.org/msri07/read/Message%20Passing%20Interface%20(MPI).html